So, you've launched your website, but when you search for it on Google… crickets. It’s a common and frustrating problem, but don't panic. The culprit is usually something straightforward, like an indexing issue, a technical block (think a rogue noindex tag), or simply that your site is too fresh for Google to have found it yet.

The very first thing I always do is a quick site:yourdomain.com search. This simple check immediately tells you what, if anything, Google has in its index for your site.

Your First Steps in Diagnosing an Invisible Website

That sinking feeling when you search for your site and it's nowhere to be found is one I know well. But before you start spiraling down a rabbit hole of complex technical SEO, a couple of basic checks will usually point you in the right direction. It's all about a calm, methodical approach.

These first moves are your diagnostic toolkit—they’ll tell you if Google can even see your site in the first place.

The site: Search Operator

Your first port of call is Google itself. Open a new tab and type site:yourdomain.com into the search bar, making sure to replace "yourdomain.com" with your actual domain. This command asks Google to show the pages it has indexed from that website. The list is a sample rather than a guaranteed complete inventory, but it's enough to tell you whether you're in the index at all.

The results—or the lack of them—give you a clear answer:

- You See a List of Your Pages: Great news! This means Google has indexed your site. Your issue isn't invisibility; it's a ranking problem. That's a different beast, but at least you know you're in the game.

- You See "did not match any documents": This is your confirmation. Google has nothing from your site in its index. Now we know we’re dealing with an indexing problem.

Verify Ownership in Google Search Console

If that site: search came up empty, your next stop is Google Search Console (GSC). This free tool is your direct line to Google, and frankly, it's non-negotiable for anyone serious about their website. It gives you the raw data on how Google bots see and process your site.

Think of Google Search Console as the diagnostic dashboard for your website's health. Without it, you're flying blind, guessing what's wrong instead of getting clear, actionable data directly from the source.

Once you verify ownership, you unlock a treasure trove of information. You can submit sitemaps, inspect individual URLs to see exactly why they aren't indexed, and check for any penalties holding you back. Setting up GSC is a foundational step, right up there with learning how to build a website (https://www.sugarpixels.com/how-to-build-a-website/) in the first place.

If your site and business are complete ghosts online, make sure all your bases are covered. This includes setting up a Google Business Profile. For a deeper dive, learning about getting your business on Google is a fantastic next step. These initial checks build the foundation for all the real troubleshooting to come.

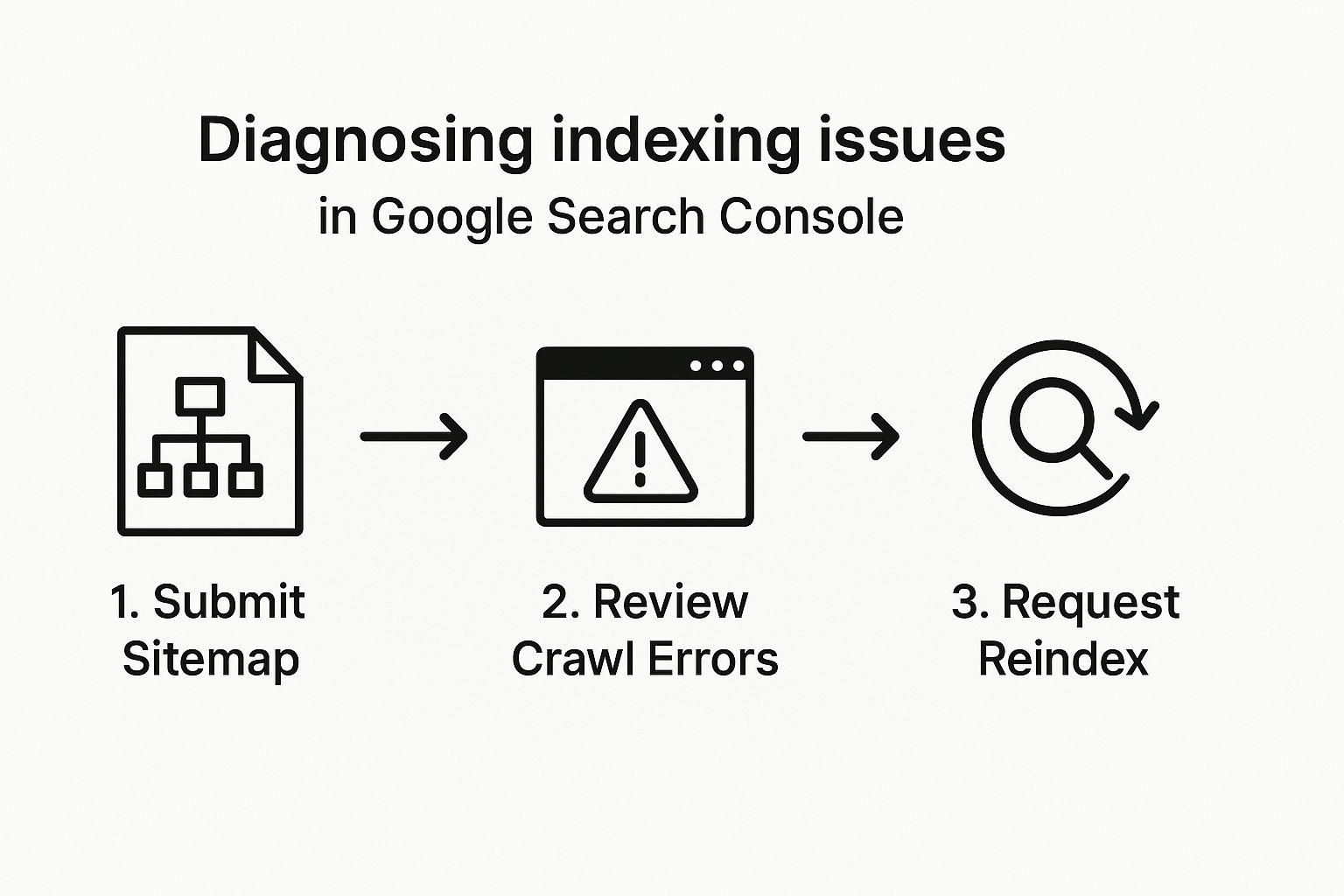

Decoding Indexing Errors in Google Search Console

If your quick site: search turns up nothing, it's time to head to the source of truth: Google Search Console (GSC). Think of GSC as the command center for your website's relationship with Google. It's a free, powerful platform that stops you from guessing and lets you see your site through Google's eyes. This is where the real diagnostic work begins.

Google’s index is an absolute behemoth, storing over 100 million gigabytes of data from hundreds of billions of pages. When your site isn't in it, there's usually a breakdown somewhere in the crawling and indexing process. It could be a technical hiccup or simply that Google's crawlers haven't gotten to you yet. Considering Google sees millions of searches every minute and that 15% of those queries are brand new, getting your site discovered is everything.

Mastering the URL Inspection Tool

The single most valuable tool for troubleshooting a specific page is the URL Inspection Tool. You'll find it right at the top of your GSC dashboard—a simple search bar that packs a serious punch.

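Just paste the full URL of the missing page into the tool and hit enter. In seconds, GSC will return a report based on what is currently in the Google index, telling you exactly what's going on with the last version Google crawled. If you've changed the page since then, use "Test Live URL" to check the current version. It’s the fastest way to get answers.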

Here’s a look at the main GSC dashboard, your starting point for any investigation.

The report from the URL Inspection Tool gives you two clear outcomes:

- URL is on Google: This is good news! It means the page is indexed and can appear in search results. If you're still not seeing it, the problem is likely related to ranking and keywords, not indexing.

- URL is not on Google: This confirms you have an indexing problem. The tool won't leave you hanging, though; it will provide a specific reason why the page is missing, which is your clue for what to fix next.

From this screen, you can see crucial details like the last crawl date, how Google discovered the page, and if it's being blocked by a noindex tag or a robots.txt rule. Once you've fixed an issue on the live page, you can come right back here and click “Request Indexing” to tell Google to take another look.

Interpreting the Page Indexing Report

While the URL Inspection Tool is your go-to for individual pages, the Page Indexing report gives you the 30,000-foot view. You can find it under the 'Indexing' section in the left-hand menu. This report splits all your site's URLs into two main groups: Indexed and Not Indexed. Your job is to dive into the "Not Indexed" bucket and figure out why.

The table below breaks down some of the most common indexing roadblocks you'll see in GSC and gives you a clear starting point for each.

Common Indexing Issues and First Steps to Fix Them

| Indexing Issue | Potential Cause | Where to Check in GSC | First Action Step |
|---|---|---|---|
| Blocked by robots.txt | A Disallow rule in your robots.txt file is preventing Google from crawling the page. | URL Inspection Tool; robots.txt report | Remove or modify the blocking rule in your robots.txt file. |
| Page with redirect | The URL is a redirect (301 or 302) to another page, so Google won't index it. | URL Inspection Tool (under "Crawled as") | Verify the redirect is intentional. If so, this is normal behavior. |
| Excluded by ‘noindex’ tag | A meta tag or X-Robots-Tag on the page is explicitly telling Google not to index it. | URL Inspection Tool (under "Indexing allowed?") | Remove the noindex tag from the page's HTML or HTTP header. |
| Not found (404) | The URL returns a 404 error, meaning the page doesn't exist. | Crawl Stats report; URL Inspection Tool | Restore the page if it was deleted by mistake, or implement a 301 redirect. |
| Crawled – currently not indexed | Google crawled the page but decided it wasn't high-quality enough to index. | Page Indexing report; URL Inspection Tool | Review and significantly improve the page's content and user value. |
| Discovered – currently not indexed | Google knows the URL exists but hasn't allocated resources to crawl it yet. | Page Indexing report; Sitemaps report | Improve internal linking to the page and ensure your sitemap is up to date. |

By working through these common issues, you can systematically clear the technical hurdles that are keeping your content hidden.

Two of the most frequent and confusing statuses you'll encounter are "Crawled – currently not indexed" and "Discovered – currently not indexed." They sound almost identical, but they point to completely different problems.

A common mistake is treating all indexing issues the same. "Crawled" means Google has seen your page but decided it wasn't valuable enough to index. "Discovered" means Google knows your page exists but hasn't even gotten around to crawling it yet.

Let's break down what each one really means for you.

What "Crawled – currently not indexed" Means

When you see this, Googlebot has successfully visited your page. The problem is, it made an editorial decision not to add it to the index. This is almost always a sign of a content quality issue.

Google might have decided the page was too thin, duplicated content from another source, or simply didn't provide enough value to users. The fix here isn't technical—it's strategic. You need to roll up your sleeves and improve the page's content, add unique insights, and make sure it serves a clear purpose.

What "Discovered – currently not indexed" Means

This status tells you that Google knows your URL exists (it probably found it in your sitemap or via a link), but it hasn't allocated the resources to actually crawl it yet. This happens for a few common reasons:

- New Site: If your website is brand new, it might not have enough authority or credibility yet to justify frequent crawling.

- Crawl Budget Issues: On very large websites, Google might ration its "crawl budget." If your server is slow or has errors, Google might back off, leaving some pages uncrawled.

- Poor Internal Linking: If the page is an "orphan" with very few internal links pointing to it, Google takes that as a signal that the page isn't very important.

The solution here is to improve the signals you're sending to Google. Make sure your XML sitemap is submitted and error-free, and build a stronger internal linking structure to show which pages matter most. Learning to use GSC this way turns the mystery of an invisible website into a clear, actionable checklist.

Finding Technical Barriers That Hide Your Site

So, your site is all set up in Google Search Console, but you’re still invisible. What gives? Often, the problem lies in a few technical tripwires you might have accidentally set, effectively telling Google to stay away. These issues act like invisible walls, stopping crawlers in their tracks before they even see your great content. A quick mini-technical audit is the best way to tear them down.

I've seen it time and time again: the simplest lines of code can cause the biggest headaches. Finding and fixing these technical stop signs is usually the fastest way to get your site back in Google's good graces. Let's look at the most common culprits I find when a site is indexed but key pages are mysteriously missing.

Uncovering Rogue Noindex Directives

The most common offender, by far, is the ‘noindex’ meta tag. This little snippet of code sits in the <head> section of your page's HTML and gives search engines a direct command: "Do not include this page in your search results." It’s handy for things like internal login pages or post-purchase thank-you pages, but it’s a total disaster if it lands on your homepage or a core service page.

How does this happen? Usually, it's an accident. A classic example is a little checkbox in WordPress settings that says "Discourage search engines from indexing this site." Perfect for when the site is in development, but if that box stays checked after launch, it slaps a noindex tag on every single page.

To see if this is your problem, go to your webpage, right-click, and choose "View Page Source." Then, just hit Ctrl+F (or Cmd+F on a Mac) and search for "noindex." If you spot a line like <meta name="robots" content="noindex, follow">, you’ve found the smoking gun. Getting rid of that line or unchecking the setting in your CMS is the immediate fix.
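If you'd rather not eyeball the source by hand, the same check can be scripted. The snippet below is a minimal sketch using only Python's standard library. The names `RobotsMetaFinder` and `find_noindex` are made up for this example, and the sketch assumes you already have the page's HTML (and, optionally, its response headers) in hand.

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collects the content of <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {k.lower(): (v or "") for k, v in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            self.directives.append(attr.get("content", "").lower())


def find_noindex(html, headers=None):
    """Return a list describing every place a noindex directive was found."""
    hits = []
    finder = RobotsMetaFinder()
    finder.feed(html)
    for content in finder.directives:
        if "noindex" in content:
            hits.append(f"meta tag: {content}")
    # The same directive can also arrive as an X-Robots-Tag HTTP header.
    for name, value in (headers or {}).items():
        if name.lower() == "x-robots-tag" and "noindex" in value.lower():
            hits.append(f"header: {value}")
    return hits


page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
print(find_noindex(page))
```

An empty list means no noindex directive turned up in the HTML or headers you passed in. To run this against a live page, you could fetch it with `urllib.request.urlopen` and pass in the decoded body along with the response headers.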

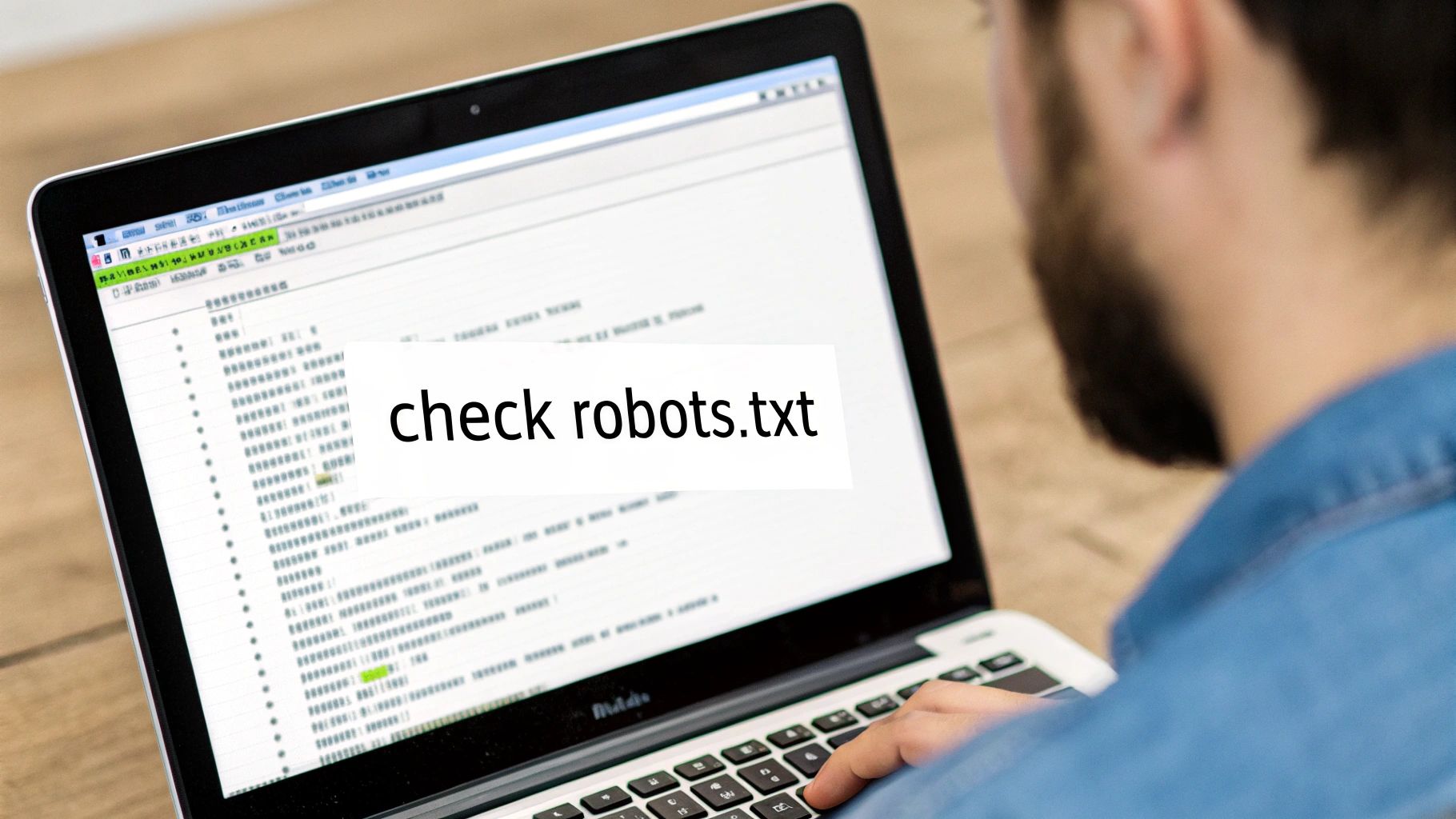

Checking the Power of the Robots.txt File

Most sites have a robots.txt file, a simple text file located in the root directory (you can see it at yourdomain.com/robots.txt). Its job is to give web crawlers instructions on which parts of your site they can and can't crawl. It's a powerful tool, but one tiny mistake can make your entire site invisible to Google.

The most dangerous line you can find in this file is Disallow: /. Placed under User-agent: *, that single line tells every compliant search engine bot not to crawl any part of your website. It's the digital equivalent of putting a giant "Keep Out" sign on your front door.

I once worked with a client whose traffic went to zero overnight. After hours of panic, we found it: a developer had accidentally pushed a robots.txt file from a staging site that contained Disallow: /. It's a five-second fix for a problem that can cost you thousands in lost revenue.

Check your own robots.txt by typing your domain followed by /robots.txt into your browser. Keep an eye out for any Disallow rules that might be blocking important pages or entire sections of your site. For a more thorough check, open the robots.txt report in Google Search Console, which replaced the older robots.txt Tester. It shows the robots.txt files Google found for your site, when they were last crawled, and any warnings or errors. To test a specific page, run it through the URL Inspection Tool, which reports whether a robots.txt rule is blocking it.
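For a quick local sanity check, Python's built-in `urllib.robotparser` can evaluate a set of rules against a list of URLs. This is a rough sketch, not a replica of Googlebot: the standard-library parser does not handle wildcards or rule precedence exactly the way Google does, and the `blocked_paths` helper and the sample URLs are assumptions made for illustration.

```python
from urllib.robotparser import RobotFileParser


def blocked_paths(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt rules forbid user_agent to crawl."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [u for u in urls if not parser.can_fetch(user_agent, u)]


# The kind of file that sometimes leaks from a staging site into production.
staging_rules = """User-agent: *
Disallow: /
"""

urls = ["https://yourdomain.com/", "https://yourdomain.com/services/"]
print(blocked_paths(staging_rules, urls))
```

With the staging rules above, every URL in the list comes back as blocked. Swap in the contents of your real robots.txt and a handful of your most important URLs to see whether any of them are caught by a Disallow rule.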

Ensuring Your XML Sitemap is Clean and Correct

Think of your XML sitemap as a neatly organized roadmap you hand directly to Google, listing all the important pages on your website. This map helps ensure no key content gets overlooked during the crawl. But if the map is wrong, outdated, or was never even submitted, it’s not doing you any good.

For your sitemap to be effective, it needs to be:

- Submitted to Google Search Console: This is how you officially give the map to Google.

- Free of Errors: GSC is great about reporting issues it finds, like broken links (404s) or URLs that your robots.txt file is blocking.

- Up-to-Date: The sitemap should automatically add new pages and remove old ones. Thankfully, most modern CMS platforms and SEO plugins handle this for you.
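Before submitting a sitemap, it can help to run a quick check over its contents. The sketch below assumes a standard sitemaps.org `urlset` file that you've already loaded as text. The helper names and the sample URLs are hypothetical, and it only looks for two simple problems: duplicate entries and URLs on the wrong host.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlsplit

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text):
    """Return every <loc> value listed in a standard XML sitemap."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS) if loc.text]


def sitemap_problems(xml_text, expected_host):
    """Flag duplicate entries and URLs that point at a different host."""
    problems = []
    seen = set()
    for url in sitemap_urls(xml_text):
        if urlsplit(url).netloc != expected_host:
            problems.append((url, "wrong host"))
        if url in seen:
            problems.append((url, "duplicate"))
        seen.add(url)
    return problems


sample = (
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
    "<url><loc>https://yourdomain.com/</loc></url>"
    "<url><loc>https://yourdomain.com/services/</loc></url>"
    "<url><loc>https://staging.yourdomain.com/old-page/</loc></url>"
    "<url><loc>https://yourdomain.com/services/</loc></url>"
    "</urlset>"
)

for url, issue in sitemap_problems(sample, "yourdomain.com"):
    print(issue, url)
```

This doesn't replace the Sitemaps report in GSC, which also tells you whether Google could fetch and read the file, but it catches the kind of leftovers (staging URLs, repeated entries) that tend to creep in after a migration.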

If you're running a more complex website, especially an e-commerce store, you might need to dig deeper. To systematically uncover the technical snags holding you back, following a comprehensive ecommerce SEO audit blueprint can be a game-changer. It helps you look beyond just these basics.

Ultimately, these technical checks are about making sure you haven't put up any unintended roadblocks. Most of these issues boil down to simple human error, especially when multiple people are working on a site or when changes are made without thinking through the SEO impact. If you're working with a team, it pays to know how to choose a web design agency that gets these technical fundamentals from day one. Fixing these technical missteps is a critical first step in solving the mystery of why your website isn't showing up in Google.

Is Your Content Worthy of Ranking?

So far, we’ve been digging into the technical side of things—all the signals that tell Google your site exists and is ready for visitors. But once you clear those technical hurdles, you’re in a whole new ballgame.

Getting indexed is just the entry ticket. It doesn't mean you'll ever show up for a search your customers are actually making. If you've got all the technical boxes checked but your site is still a ghost, it’s time to take a hard, honest look at your content.

This is exactly where so many people get stuck. They fix the code, submit the sitemap, and then… crickets. Why? Because Google’s job isn’t just to find pages; it's to find the best page for whatever someone is looking for.

Moving Beyond Thin Content

Google has a name for pages that don’t make the cut: "thin content."

Don't mistake this for just a low word count. A page can drone on for thousands of words and still be considered "thin" if it offers zero real value. We've all seen them—pages that are just a chaotic mess of keywords, rehashing the same obvious information you’ve read a dozen times elsewhere. They feel like they were written for a robot, not a person.

Those are precisely the pages Google is trying to bury.

To make sure your content isn't one of them, ask yourself some tough questions:

- Does this page offer a unique take or data that can’t be found anywhere else?

- Is my explanation clearer, more detailed, or just plain more helpful than what’s already ranking?

- Does this actually solve the user’s problem, or am I just restating their question?

If the answer to those is "no," then you have a content quality problem. You have to bring more to the table than your competitors. That’s the reality of competing in Google’s world, which holds a staggering 91% market share. For more on that, 9Rooftops.com has some great data.

Are You Answering the User's Real Question?

One of the biggest shifts I’ve seen in my career is the move toward search intent. It’s not about stuffing keywords anymore. It’s about understanding the why behind the search.

Think about it. When someone types something into Google, they have a goal.

Let’s use a simple example:

- "how to fix a leaky faucet" is informational. The searcher wants a step-by-step guide, probably with pictures or a video.

- "best plumber near me" is transactional. They're ready to open their wallet and need reviews, phone numbers, and service info—fast.

- "plumber vs DIY cost" is investigational. They're weighing their options and need a clear comparison of costs, pros, and cons.

If your page is all about your amazing plumbing services, it’s never going to rank for "how to fix a leaky faucet." It’s a complete mismatch for what the user actually needs in that moment.

Your content's success hinges on a simple promise: delivering precisely what the user expects to find when they click. If your page title promises a solution but the content is a sales pitch, users will leave, telling Google your page wasn't a good answer.

Building Credibility with E-E-A-T

For Google to rank your content consistently, it has to see you as a credible source. The framework for this is called E-E-A-T, which stands for Experience, Expertise, Authoritativeness, and Trustworthiness. This isn't just a nice-to-have; it's essential, especially for topics that could impact someone's health, money, or well-being.

Here’s a quick breakdown of how to show it:

- Experience: Prove you've actually done what you're talking about. Use original photos, share case studies, or tell personal stories.

- Expertise: Show off your credentials. Add author bios that list qualifications, certifications, or relevant work history.

- Authoritativeness: Become the go-to source in your niche. This is heavily influenced by getting links and mentions from other well-respected sites.

- Trustworthiness: Make it easy for people to trust you. Have clear contact information, cite your sources, and post your privacy policy.

Creating content that nails E-E-A-T is fundamental. If you're looking for more ways to align your content with your business goals, explore our resources on building a winning digital strategy. More often than not, when a website isn't showing up in Google, the fix isn't some secret technical trick—it's about making your content genuinely helpful and credible.

Investigating Penalties and Security Problems

Alright, so you’ve gone through all the technical checks. Your on-page SEO is tight, your content is solid, but your site is still a ghost on Google. At this point, we need to dig into a couple of less common, but much more serious, possibilities. It's rare, but sometimes a site’s disappearance isn't just an indexing hiccup—it's because Google has either penalized it or flagged it as a security risk.

These are the big ones, the "worst-case scenarios," and we have to rule them out. If your site has been hit with a penalty or compromised by hackers, no amount of keyword research or backlink building will fix the problem. Let’s walk through how to check for these issues without panicking.

Checking for Manual Actions

A manual action is exactly what it sounds like: a real person at Google has reviewed your site and decided it violates their quality guidelines. This isn't an algorithm making a mistake; it’s a direct penalty that can get your pages demoted or completely kicked out of the search results.

The only place to check for this is inside your Google Search Console account. On the left-hand menu, look for the "Security & Manual Actions" tab and click on "Manual actions."

- If you see a green checkmark and "No issues detected," you can breathe a huge sigh of relief. You're in the clear.

- If you see a red flag with a detailed description, you’ve got a penalty. The report will tell you exactly what’s wrong, whether it's for something like unnatural links pointing to your site or having thin content that offers no real value.

A manual action is basically Google putting you in a time-out. They're telling you, "We see what you're doing, we don't like it, and you're out of the index until you clean up your act."

The good news is that Google doesn't leave you in the dark. If you have a penalty, the report will list the affected pages and link to the specific guidelines you violated. Your job is to fix the problem—that might mean disavowing spammy links or completely overhauling your low-quality content. Once you've done the work, you submit a reconsideration request explaining the steps you took.

Identifying Security Issues

The other showstopper that can get your site de-indexed is a security breach. If Google finds out your site has been hacked, is distributing malware, or is being used for phishing, it will act fast to protect searchers. That usually means pulling your site from the results or slapping a big, scary warning on your listings like "This site may be hacked."

Like with manual actions, the Security Issues report in Google Search Console is your go-to source. You’ll find it right under the "Security & Manual Actions" tab.

This report will alert you to a few different types of problems:

- Hacked content: Someone has snuck malicious pages, links, or code onto your site.

- Malware: Your site is trying to install harmful software on your visitors' computers. This is a five-alarm fire.

- Social engineering: Your pages are tricking people into giving up personal information (phishing) or downloading unwanted software.

Finding a security issue is terrifying, I know. But the report gives you a starting point by listing specific URLs that have been compromised. Cleaning up a hacked site is often a job for a professional. You’ll have to find and remove every malicious file, patch the vulnerability that let the attacker in, and then use the report in GSC to request a review from Google. There's no getting around it—if your site isn't showing up because of a hack, this has to be your top priority.

Common Questions About Website Visibility Issues

After you've gone through all the technical checks and content audits, a few nagging questions can still pop up. When your site isn't showing up in Google, the situation can feel frustratingly unique. Let's tackle some of the most common "what if" scenarios I see all the time and get you some straight answers.

Think of this as the troubleshooting FAQ I'd give a client. These are real-world problems that come up during site launches, redesigns, and even routine updates, and each one needs its own game plan.

How Long Does It Take for a New Website to Show Up on Google?

There's no magic number here, but you're typically looking at a window of a few days to several weeks. Before anything else can happen, Google has to "discover" your site. This usually happens when its crawlers follow a link from another website or find your URL in a sitemap you've submitted.

Want to speed things up? You absolutely can. The single most effective thing you can do is submit an XML sitemap directly through Google Search Console. Getting a link from a reputable, already-indexed site also gives Googlebot a shortcut to finding you.

Just remember, getting indexed is only the starting line. Earning good rankings for keywords that matter is a marathon of building authority and relevance over time, not a sprint.

My Website Was on Google but Has Now Disappeared. What Happened?

Seeing your site vanish from search results is alarming. It almost always means something has actively changed, either on your site or in how Google sees it.

More often than not, a sudden disappearance points to a few usual suspects. First, hunt for an accidental 'noindex' tag. I've seen these get added by mistake during a theme change or plugin update more times than I can count. Next, open up your robots.txt file and look for any new 'Disallow' rules that might be blocking access to important pages—or even your entire site.

A sudden drop-off is almost always a technical issue or a penalty. Your first move should be to use the URL Inspection Tool on your homepage. It provides a direct diagnosis from Google and is the fastest way to find the root cause.

It's also crucial to check the 'Manual Actions' and 'Security Issues' reports in Google Search Console. If Google has penalized your site, this is where you'll find out. Lastly, persistent server downtime can cause Google to temporarily drop your pages if it can't reach your site after several attempts.

Will Changing My Domain Name Make My Website Vanish from Google?

Yes, it absolutely will—if you don't handle the migration with extreme care. To Google, a new domain is a brand-new website with zero history, authority, or trust. You're essentially starting over from scratch unless you give Google a crystal-clear roadmap of what happened.

To transfer all your hard-earned authority, you have to set up permanent 301 redirects from every single old URL to its direct counterpart on the new domain. This is a page-by-page mapping, not just a simple redirect from the old homepage to the new one.
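To make the page-by-page idea concrete, here is a small sketch that builds a redirect map from a list of old URLs. It assumes the simplest case, where paths and query strings stay identical and only the domain changes. The function names and the `.example` domains are placeholders; if your URL structure changes too, you'd need a hand-maintained mapping instead.

```python
from urllib.parse import urlsplit, urlunsplit


def map_to_new_domain(old_url, new_host, scheme="https"):
    """Keep the path and query string, swap only the scheme and host."""
    parts = urlsplit(old_url)
    return urlunsplit((scheme, new_host, parts.path or "/", parts.query, ""))


def build_redirect_map(old_urls, new_host):
    """Return {old_url: new_url} for every URL, one 301 target per page."""
    return {old: map_to_new_domain(old, new_host) for old in old_urls}


old_urls = [
    "http://old-site.example",
    "https://old-site.example/services/seo/?ref=nav",
]

for old, new in build_redirect_map(old_urls, "new-site.example").items():
    print(old, "->", new)
```

The resulting map is what you'd translate into 301 rules in your server or CMS configuration. The point of generating it per URL is that every old page gets its own matching destination rather than everything collapsing onto the new homepage.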

Once that's done, use the 'Change of Address' tool in Google Search Console for your old site to officially tell Google about the move. Skipping these steps is a guaranteed way to lose all your rankings and organic traffic. A well-planned migration is the only way to minimize the damage and ensure a smooth transition.

Why Is Only My Homepage Indexed but None of My Other Pages?

This is a classic. It almost always points to a problem with either crawling or your internal linking. If Google can find your homepage but sees no clear paths to your other pages, it simply won't know they exist. Your internal pages effectively become digital islands.

The fix usually starts with making sure your most important pages are linked directly from your main navigation menu. Your internal linking structure acts like a series of signposts, guiding both users and crawlers through your site.

Here’s a quick checklist to diagnose this:

- Check Internal Links: Are your key product or service pages linked from your primary navigation or from within your homepage content?

- Review Your Sitemap: Make sure your XML sitemap is complete, error-free, and has been successfully submitted in Google Search Console. It needs to include every page you want indexed.

- Inspect Individual URLs: Grab a few of your missing internal pages and run them through the URL Inspection Tool. Look for rogue 'noindex' tags or robots.txt blocks that might be stopping them.

Solving this often comes down to creating logical pathways through your website so Google can easily discover the full scope of your content.
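One way to spot those "digital islands" is to treat your site as a graph of internal links and see which pages can't be reached from the homepage. The sketch below assumes you've already gathered the links, for example from a crawl export. The `links` dictionary, the page paths, and the function names are all illustrative.

```python
from collections import deque


def reachable_pages(links, start):
    """Breadth-first walk over internal links, beginning at the start page."""
    seen = {start}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return seen


def orphan_pages(links, start, all_pages):
    """Pages you want indexed that no chain of links from the start page reaches."""
    return sorted(set(all_pages) - reachable_pages(links, start))


# Each key is a page; each value lists the internal links found on it.
links = {
    "/": ["/about/"],
    "/about/": ["/"],
    "/services/": ["/contact/"],
}
all_pages = ["/", "/about/", "/services/", "/contact/"]

print(orphan_pages(links, "/", all_pages))
```

In this example, the services and contact pages link to each other but nothing on the homepage or about page points to them, so a crawler starting at the homepage would never find them through links alone.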

At Sugar Pixels, we believe building a powerful online presence shouldn't be a source of stress. Our team handles the complex technical details, from web design and SEO to reliable hosting, so you can focus on running your business. Visit us at https://www.sugarpixels.com to see how we can make your website work for you.